I asked ChatGPT to write source code for the following:

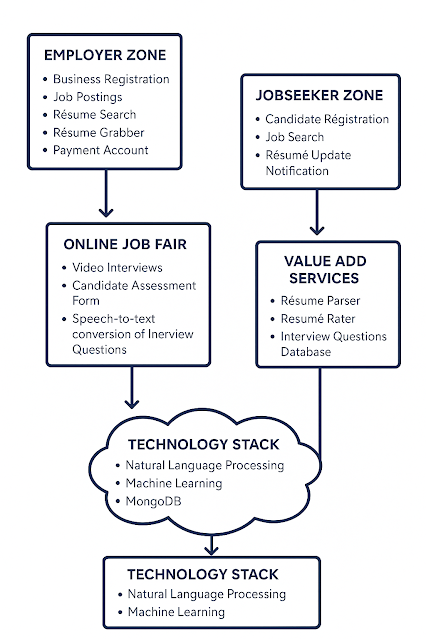

Software Architecture for Online Jobs Fair (29 March 2018)

STAGE 2 — JOBSEEKER ZONE

1. Candidate Registration

📌 Purpose: Let freshers and professionals register their profiles (optionally via LinkedIn).

🔧 Stack:

- Backend: FastAPI
- Frontend: React
- DB: MongoDB
- LinkedIn OAuth: using `authlib` or Firebase Auth

🔑 API Endpoints:

- `POST /api/candidate/register`
- `POST /api/candidate/linkedin-auth`
- `GET /api/candidate/{id}`

📄 FastAPI Code (Freshers vs Experienced)
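The code for this step did not survive in the post. As a minimal sketch, assuming the form distinguishes `fresher` from `experienced` candidates, here is the core validation a `POST /api/candidate/register` handler could call. The rules (graduation year for freshers; years of experience and current employer for experienced hires) and all field names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class CandidateRegistration:
    name: str
    email: str
    candidate_type: str  # assumed values: "fresher" or "experienced"
    skills: list[str] = field(default_factory=list)
    graduation_year: Optional[int] = None     # hypothetical: required for freshers
    years_experience: Optional[float] = None  # hypothetical: required for experienced
    current_employer: Optional[str] = None    # hypothetical: required for experienced


def validate_registration(reg: CandidateRegistration) -> list[str]:
    """Return a list of validation errors; an empty list means the form is valid."""
    errors = []
    if "@" not in reg.email:
        errors.append("email: invalid address")
    if reg.candidate_type == "fresher":
        if reg.graduation_year is None:
            errors.append("graduation_year: required for freshers")
    elif reg.candidate_type == "experienced":
        if not reg.years_experience or reg.years_experience <= 0:
            errors.append("years_experience: must be > 0 for experienced candidates")
        if not reg.current_employer:
            errors.append("current_employer: required for experienced candidates")
    else:
        errors.append("candidate_type: must be 'fresher' or 'experienced'")
    return errors
```

A FastAPI route would typically answer with HTTP 422 when this list is non-empty, and otherwise insert the record into the MongoDB candidates collection.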

💡 LinkedIn Autofill Option: Use the LinkedIn API to fetch the candidate's profile, then pre-fill the registration form on the client with JavaScript `fetch` or Firebase Auth.
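A sketch of the autofill mapping, assuming Sign In with LinkedIn using OpenID Connect, whose userinfo response carries standard claims such as `name`, `given_name`, `family_name`, `email` and `picture`. The function name and the target form fields are hypothetical.

```python
def prefill_from_linkedin(userinfo: dict) -> dict:
    """Map LinkedIn OIDC userinfo claims onto the registration form's fields."""
    # Prefer the full "name" claim; otherwise join given and family names.
    full_name = userinfo.get("name") or " ".join(
        part for part in (userinfo.get("given_name"), userinfo.get("family_name")) if part
    )
    return {
        "name": full_name,
        "email": userinfo.get("email", ""),
        "email_verified": bool(userinfo.get("email_verified", False)),
        "photo_url": userinfo.get("picture"),
        # Skills and work history are not among the basic OIDC claims,
        # so the candidate still fills these in manually.
        "skills": [],
    }
```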

2. Candidate Job Search

📌 Purpose: Show personalized job results based on profile match (AI-driven).

🔧 Additions:

- Job-candidate matching via cosine similarity (as in the earlier stage)
- Auto-fill search filters based on the candidate profile
- Fast text search via SOLR/Elasticsearch

📄 FastAPI Job Search Endpoint
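A minimal, dependency-free sketch of the ranking step, assuming each job stores a list of required skills. It builds bag-of-words vectors and scores them with cosine similarity; the `jobs` dict keys and the optional `location` filter (standing in for an auto-filled search filter) are illustrative assumptions. In production, SOLR/Elasticsearch would first narrow the job set, and this ranking would be applied to the hits.

```python
import math
from collections import Counter


def _vector(terms) -> Counter:
    """Bag-of-words vector over lower-cased terms."""
    return Counter(t.lower() for t in terms)


def cosine_similarity(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def search_jobs(candidate_skills, jobs, location=None, top_k=10):
    """Rank jobs (dicts with hypothetical keys "title", "skills", "location")."""
    cand = _vector(candidate_skills)
    results = []
    for job in jobs:
        if location and job.get("location") != location:
            continue  # filter pre-filled from the candidate profile
        score = cosine_similarity(cand, _vector(job["skills"]))
        results.append({"title": job["title"], "match": round(score * 100, 1)})
    results.sort(key=lambda r: r["match"], reverse=True)
    return results[:top_k]
```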

3. Résumé Update Notification

📌 Purpose: Automatically notify candidates to update outdated resumes.

🔧 Stack Additions:

- Email automation via SendGrid or SMTP
- Trigger the parser when a résumé is outdated or no longer matches open jobs

📄 Trigger Notification Function
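A minimal sketch of the trigger using only the standard library (`email.message.EmailMessage`, `smtplib`). The 180-day staleness threshold, the 40% minimum match score, the sender address and the SMTP host/credentials are all placeholder assumptions; a SendGrid client could replace `send_reminder`.

```python
import smtplib
from datetime import datetime, timedelta
from email.message import EmailMessage

STALE_AFTER = timedelta(days=180)  # assumed policy threshold
MIN_MATCH = 40.0                   # assumed minimum match score (%)


def needs_update(last_updated: datetime, match_score: float, now: datetime) -> bool:
    """Flag a résumé that is too old or matches open jobs poorly."""
    return now - last_updated > STALE_AFTER or match_score < MIN_MATCH


def build_reminder(to_addr: str, name: str, sender: str = "noreply@example.com") -> EmailMessage:
    """Compose the reminder email (sender address is a placeholder)."""
    msg = EmailMessage()
    msg["Subject"] = "Time to update your resume"
    msg["From"] = sender
    msg["To"] = to_addr
    msg.set_content(
        f"Hi {name},\n\n"
        "Your resume has not been updated recently or no longer matches open jobs well.\n"
        "Uploading a fresh copy will improve your job recommendations.\n"
    )
    return msg


def send_reminder(msg: EmailMessage, host: str, port: int, user: str, password: str) -> None:
    """Send via SMTP with STARTTLS; host and credentials are deployment-specific."""
    with smtplib.SMTP(host, port) as server:
        server.starttls()
        server.login(user, password)
        server.send_message(msg)
```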

4. UI Mockup Suggestions (Jobseeker Dashboard)

We can generate these visually next if needed:

- Welcome Panel (shows match score, alerts)
- Job Recommendations List (with match %)
- Profile Completion Tracker
- Resume Upload / Parser Keyword Review

5. NLP Skill Extraction (Optional Enhancement)

Use keyword extraction on the uploaded résumé to pre-populate the skills field.

📄 Skill Extractor using spaCy

Here, `SKILL_SET` is a set of known technical and soft skills, scraped or curated from job data.
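spaCy's `PhraseMatcher` is the natural tool for this. As a dependency-free stand-in (a plain substitute, not spaCy), the sketch below matches a hypothetical sample `SKILL_SET` against the résumé text with regular expressions. It uses lookarounds instead of `\b` so that skills such as `c++` still match.

```python
import re

# Hypothetical sample; in practice SKILL_SET is curated from job data.
SKILL_SET = {"python", "fastapi", "mongodb", "react", "c++", "machine learning", "communication"}


def extract_skills(text: str, skill_set=SKILL_SET) -> list[str]:
    """Return the known skills mentioned in the text, sorted alphabetically."""
    lowered = text.lower()
    found = set()
    for skill in skill_set:
        # (?<!\w) and (?!\w) act as word boundaries that also work after "+".
        pattern = r"(?<!\w)" + re.escape(skill) + r"(?!\w)"
        if re.search(pattern, lowered):
            found.add(skill)
    return sorted(found)
```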

STAGE 3 — ONLINE JOB FAIR MODULE

✅ OVERVIEW

Components:

- Video Interviews (live, real-time panel)
- Candidate Assessment Forms (rating + remarks)
- Speech-to-Text Conversion of Interview Questions
- Interactive Whiteboard for Coding/Notes

Modern Stack Suggestions:

| Function | Recommended Stack / Tool |
|---|---|
| Video Interview | 100ms / Agora / Jitsi Meet + WebRTC |
| STT Conversion | Whisper API (OpenAI) or Vosk (local Python STT) |
| Whiteboard | Excalidraw / Ziteboard (embeddable) |
| Assessment Forms | FastAPI backend + MongoDB for storing structured ratings |
| Frontend UI | React + TailwindCSS + WebSockets for live events |

1. 🔴 VIDEO INTERVIEWS (Live with multi-user panel)

🔧 Option 1: Embed Jitsi Meet (Open-source & Free)

🔧 Option 2: Use 100ms or Agora SDK (paid but scalable with mobile support)

📄 Basic Jitsi Integration in React (Frontend)
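The React embed itself is frontend code; the backend's job is to hand each interview its own room. A minimal sketch, assuming the public `meet.jit.si` server (a self-hosted Jitsi domain would be swapped in) and a hypothetical naming scheme built from job and candidate IDs. The returned `room_name` or `url` is what the frontend Jitsi embed would load.

```python
import secrets
from urllib.parse import quote

JITSI_DOMAIN = "meet.jit.si"  # public server; replace with a self-hosted domain


def create_interview_room(job_id: str, candidate_id: str) -> dict:
    """Return a unique Jitsi room name and join URL for one interview."""
    # A random suffix keeps room names hard to guess on a shared server.
    room_name = f"jobfair-{job_id}-{candidate_id}-{secrets.token_urlsafe(8)}"
    return {"room_name": room_name, "url": f"https://{JITSI_DOMAIN}/{quote(room_name)}"}
```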

2. 📝 CANDIDATE ASSESSMENT FORM

📌 Functionality:

- Each interviewer fills in a form
- Fields: Communication, Technical, Attitude, Final Rating
- Ratings are stored and aggregated per candidate

📄 MongoDB Schema

📄 FastAPI Endpoint
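A sketch of the assessment record and per-candidate aggregation, assuming a 1 to 5 scale for every criterion (the scale and field names are assumptions). Each `Assessment` corresponds to one MongoDB document, for example `dataclasses.asdict(assessment)` passed to pymongo's `insert_one`, and `aggregate` computes the panel averages an endpoint would return.

```python
from dataclasses import dataclass
from statistics import mean

CRITERIA = ("communication", "technical", "attitude", "final_rating")


@dataclass
class Assessment:
    candidate_id: str
    interviewer_id: str
    communication: int  # assumed 1-5 scale for every criterion
    technical: int
    attitude: int
    final_rating: int
    remarks: str = ""

    def __post_init__(self):
        # Reject out-of-range ratings before they reach the database.
        for name in CRITERIA:
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")


def aggregate(assessments: list[Assessment], candidate_id: str) -> dict:
    """Average each criterion across the interview panel for one candidate."""
    rows = [a for a in assessments if a.candidate_id == candidate_id]
    if not rows:
        return {"candidate_id": candidate_id, "panel_size": 0}
    summary = {name: round(mean(getattr(a, name) for a in rows), 2) for name in CRITERIA}
    return {"candidate_id": candidate_id, "panel_size": len(rows), **summary}
```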

3. 🗣️ SPEECH-TO-TEXT (STT) FOR INTERVIEW QUESTIONS

🧠 Suggestion:

- Use Whisper (OpenAI) for high accuracy
- Alternative: Vosk for offline inference

📄 Whisper API Example (Python)

📄 FastAPI Endpoint for Upload + Transcript
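A sketch using the OpenAI Python SDK (v1 style, `client.audio.transcriptions.create` with `model="whisper-1"`), plus a simple heuristic that keeps transcript sentences ending in `?` as candidate interview questions. `transcribe` needs the `openai` package and an `OPENAI_API_KEY` in the environment; `extract_questions` is a hypothetical helper that relies on Whisper emitting punctuation.

```python
import re


def transcribe(path: str) -> str:
    """Send an audio file to OpenAI's Whisper model and return the transcript."""
    from openai import OpenAI  # imported lazily so the rest works without the SDK
    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    with open(path, "rb") as audio:
        result = client.audio.transcriptions.create(model="whisper-1", file=audio)
    return result.text


def extract_questions(transcript: str) -> list[str]:
    """Heuristic: keep the sentences that end with a question mark."""
    sentences = re.split(r"(?<=[.?!])\s+", transcript.strip())
    return [s for s in sentences if s.endswith("?")]
```

In a FastAPI upload endpoint, the `UploadFile` would be written to a temporary file, passed to `transcribe`, and the extracted questions saved to the interview questions database.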

4. 🧑🏫 INTERACTIVE WHITEBOARD

💡 Options:

- Embed Excalidraw (open-source drawing tool)
- Embed Ziteboard (with a session URL per interview)

📄 Embed Excalidraw in React
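The Excalidraw React component handles drawing on the client. On the server, here is a minimal sketch for keeping each interview's whiteboard, assuming the frontend posts the `elements` and `appState` it receives from Excalidraw's `onChange` callback. The in-memory dict stands in for a MongoDB collection, and the function names are hypothetical.

```python
import json

# Stand-in for a MongoDB collection keyed by interview ID (hypothetical).
_SCENES: dict[str, str] = {}


def save_scene(interview_id: str, scene: dict) -> None:
    """Validate and store a whiteboard scene as JSON."""
    if not isinstance(scene.get("elements"), list):
        raise ValueError("scene must contain an 'elements' list")
    _SCENES[interview_id] = json.dumps(scene)


def load_scene(interview_id: str) -> dict:
    """Return the saved scene, or an empty board for a new interview."""
    raw = _SCENES.get(interview_id)
    return json.loads(raw) if raw is not None else {"elements": [], "appState": {}}
```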

📊 COMBINED DASHBOARD FLOW (Optional UI Elements)

| Section | Feature |
|---|---|
| Video Room | Jitsi/100ms embedded |
| Whiteboard | Side panel with Excalidraw for notes or diagrams |
| Question Capture | Real-time STT transcript below the video window |
| Assessment | Interviewer panel below, with form fields |
| Chat/Docs | File sharing, note-taking, transcript download options |

STAGE 4 — VALUE ADD SERVICES MODULES

Modules Covered:

- Résumé Parser
- Résumé Rater
- Interview Questions Database

1️⃣ RÉSUMÉ PARSER

📌 Purpose: Extract structured data (skills, education, experience) from unstructured résumés.

🔧 Tech Stack:

- Parser: Python + `pdfminer`, `docx`, `PyMuPDF`
- NLP: `spaCy`, `re`, or `transformers` for Named Entity Recognition
- Storage: MongoDB (schemaless)

📄 Key Python Parser Logic
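The parser code itself is missing from the post. A minimal sketch, assuming PyMuPDF (`fitz`) for PDFs and python-docx (`docx`) for Word files, both imported lazily. Contact details and section headings are extracted with regular expressions; the patterns and section names are illustrative assumptions, and NER with spaCy or transformers would still be needed for names, employers and dates.

```python
import re

EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE_RE = re.compile(r"\+?\d[\d -]{8,}\d")  # 10+ digits/spaces/hyphens on one line
SECTION_RE = re.compile(
    r"^(education|experience|skills|projects)[ \t]*:?[ \t]*$", re.IGNORECASE | re.MULTILINE
)


def read_text(path: str) -> str:
    """Extract raw text from a PDF or DOCX résumé."""
    lower = path.lower()
    if lower.endswith(".pdf"):
        import fitz  # PyMuPDF
        with fitz.open(path) as doc:
            return "\n".join(page.get_text() for page in doc)
    if lower.endswith(".docx"):
        import docx  # python-docx
        return "\n".join(p.text for p in docx.Document(path).paragraphs)
    raise ValueError(f"unsupported file type: {path}")


def parse_resume_text(text: str) -> dict:
    """Pull contact details and named sections out of plain résumé text."""
    email = EMAIL_RE.search(text)
    phone = PHONE_RE.search(text)
    headings = list(SECTION_RE.finditer(text))
    sections = {}
    for i, match in enumerate(headings):
        # A section runs until the next recognised heading (or the end of the text).
        end = headings[i + 1].start() if i + 1 < len(headings) else len(text)
        sections[match.group(1).lower()] = text[match.end():end].strip()
    return {
        "email": email.group() if email else None,
        "phone": phone.group() if phone else None,
        "sections": sections,
    }
```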

📄 FastAPI Upload Endpoint

2️⃣ RÉSUMÉ RATER

📌 Purpose: Give a percentage score showing how well a résumé matches a job posting.

💡 Logic:

- Extract skills from both the résumé and the job description (JD)
- Compute the overlap percentage
- Use sentence embeddings for smarter matching

📄 Basic Scoring Engine
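A basic scoring engine that follows the logic above: normalise both skill lists and report the share of JD skills found in the résumé. This sketch uses exact matching only; swapping in sentence embeddings (for example with the `sentence-transformers` library) could let near-synonyms such as "ML" and "machine learning" match.

```python
def rate_resume(resume_skills, jd_skills) -> dict:
    """Score = percentage of job-description skills present in the résumé."""
    resume = {s.strip().lower() for s in resume_skills}
    required = {s.strip().lower() for s in jd_skills}
    if not required:
        return {"score": 0.0, "matched": [], "missing": []}
    matched = resume & required
    return {
        "score": round(100 * len(matched) / len(required), 1),
        "matched": sorted(matched),
        "missing": sorted(required - matched),
    }
```

The score measures coverage of the job's requirements, so extra skills on the résumé neither help nor hurt; the `missing` list doubles as feedback for the candidate.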

📄 FastAPI Endpoint

3️⃣ INTERVIEW QUESTIONS DATABASE

📌 Purpose: A searchable repository of:

- Past questions (captured via STT in live interviews)
- Crowdsourced Q&A from recruiters/candidates

🔧 Stack:

- MongoDB for storage
- Full-text search using MongoDB Atlas Search or Elasticsearch
- Optional: tag-based filtering and contributor attribution

📄 MongoDB Schema

📄 FastAPI Endpoints
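A minimal in-memory sketch of the question schema and the search the endpoints would expose, with keyword and tag filtering. The field names and `source` values are assumptions. In production the list would be a MongoDB collection, and keyword search would use a text index, Atlas Search or Elasticsearch rather than substring matching.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Question:
    text: str
    tags: list[str]
    source: str  # assumed values: "live_interview" (from STT) or "crowdsourced"
    contributor: str = "anonymous"
    created_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


class QuestionBank:
    """Stand-in for the MongoDB questions collection."""

    def __init__(self):
        self._items: list[Question] = []

    def add(self, question: Question) -> None:
        self._items.append(question)

    def search(self, keyword: str = "", tags: tuple = ()) -> list[Question]:
        """Case-insensitive substring search; a match must carry every requested tag."""
        kw = keyword.lower()
        wanted = {t.lower() for t in tags}
        return [
            q for q in self._items
            if kw in q.text.lower() and wanted <= {t.lower() for t in q.tags}
        ]
```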

🧠 FUTURE ADD-ONS (For All Value Services)

| Feature | Tools / APIs |
|---|---|
| AI-based Q&A rating | LLM (GPT or Claude) scoring the quality of answers |
| Resume visualization | Radar graph or spider chart of candidate vs. job fit |
| Candidate insights dashboard | Behavioral + skills + historical interview record |
| Export to PDF or Excel | Resume reports with match scores + keyword highlights |
