Why I listened when Elon Musk said “space”

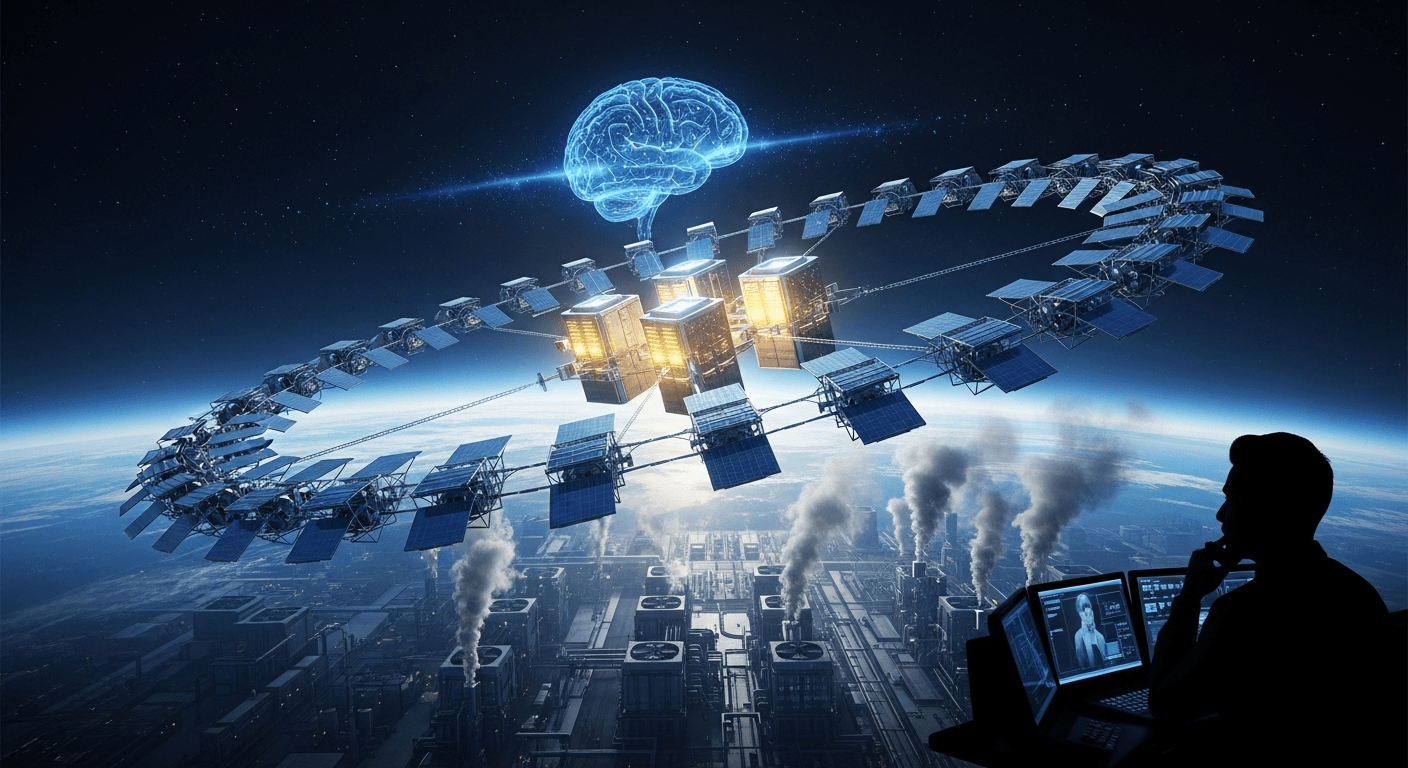

The morning I first heard the clip, I felt the familiar mixture of skepticism and curiosity I’ve had for years when bold technologists paint the future in broad strokes. Elon Musk said, bluntly, that in “36 months (maybe 30), the cheapest place to put AI will be space.” The claim landed like a provocation, but provocations have a habit of forcing useful conversations.

He rested the argument on three pillars: physics (space solar is far more productive), economics (no batteries, and lighter, simpler panels), and scale (launch cadence plus satellite economies). The full interview is available as a podcast clip and transcript, and outlets such as Times of India and Economic Times have summarized his claims.[1][2]

What he meant — and what he didn’t say out loud

At a high level the logic is clean:

- Solar irradiance in orbit is continuous and unattenuated by atmosphere, clouds, or night cycles. Musk quantified the advantage as roughly 5× (and in some framings 10× when you factor out batteries and ground losses).

- If launch costs and cadence become cheap enough, the per‑watt delivered to compute in orbit could undercut terrestrial utility + battery + land + cooling costs.

- Space offers practically limitless incremental area for scaling solar arrays; Earth is bounded by land, permitting, and grid constraints.
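As a rough sanity check on the 5× figure, here is a minimal sketch of the per-panel arithmetic. It is my own simplification, not Musk's calculation: it assumes the same panel in both places, uses the approximate solar constant above the atmosphere (about 1361 W/m²) and the 1000 W/m² rating condition for ground panels, and treats the ground capacity factor and the orbital sunlit fraction as inputs you have to supply. The function name and the sample capacity factors are illustrative assumptions.

```python
SOLAR_CONSTANT_W_M2 = 1361.0  # approximate irradiance above the atmosphere
STC_IRRADIANCE_W_M2 = 1000.0  # rating condition for ground panels


def orbit_to_ground_energy_ratio(sunlit_fraction: float,
                                 ground_capacity_factor: float) -> float:
    """Ratio of average energy per square metre of identical panel, orbit vs ground.

    sunlit_fraction: share of the orbit spent in sunlight (assumption, 0..1).
    ground_capacity_factor: average ground output as a share of rated output
        (assumption; folds in night, weather, and sun angle).
    """
    if not 0.0 <= sunlit_fraction <= 1.0:
        raise ValueError("sunlit_fraction must be in [0, 1]")
    if not 0.0 < ground_capacity_factor <= 1.0:
        raise ValueError("ground_capacity_factor must be in (0, 1]")
    orbit_avg_w_m2 = SOLAR_CONSTANT_W_M2 * sunlit_fraction
    ground_avg_w_m2 = STC_IRRADIANCE_W_M2 * ground_capacity_factor
    return orbit_avg_w_m2 / ground_avg_w_m2


if __name__ == "__main__":
    # Illustrative capacity factors only; real sites vary widely.
    for cf in (0.15, 0.20, 0.25):
        ratio = orbit_to_ground_energy_ratio(sunlit_fraction=1.0,
                                             ground_capacity_factor=cf)
        print(f"ground capacity factor {cf:.2f}: orbit/ground = {ratio:.1f}x")
```

The ratio is very sensitive to the ground capacity factor you assume, which is one reason the same physics can be quoted as 5× in one framing and closer to 10× in another.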

He tied this to an industrial arc: cheaper launch (Starship scale), vertically integrated chip production, and a hyperscaler play for SpaceX/xAI. That’s a powerful combination on paper.

My technical read — feasible, but only under a specific stack of assumptions

I’m excited about the idea, but I also want to be clear: audacity is not the same as inevitability. Here’s how I break the engineering and economic checklist down.

- Energy capture: yes — photovoltaics in sunlit orbit get more energy per panel than on Earth. That advantage is real.

- Energy delivery: the devil is in transmission. You must either process heavy workloads in orbit and return small results to Earth, or build enormous downlink capacity (laser/optical comms). Both are non-trivial.

- Launch amortization: moving heavy compute into orbit requires launch costs to be amortized across long life and huge capacity. If Starship-like launch costs fall dramatically and cadence rises, that improves the math.

- Hardware lifetime and maintenance: GPUs and servers are reliable once past infant mortality, but radiation, thermal cycling, and repair logistics in orbit are costly. Musk argues initial debug cycles can be done on the ground; I agree — but long-lived fleets still require redundancy, fault-tolerant software, and clever hardware packaging.

- Latency and data locality: for many inference workloads, latency matters. Edge compute remains on Earth. For large batch training and bulk inference that can be asynchronous, orbit can make sense.
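To make the transmission point concrete, here is a minimal sketch of how long it takes to move a given volume of data to the ground over a single link. The function name, the link rate, the data volumes, and the contact fraction are all hypothetical placeholders of mine, not figures from the interview; the point is only the shape of the arithmetic.

```python
def downlink_hours(data_terabytes: float,
                   link_gbps: float,
                   contact_fraction: float = 1.0) -> float:
    """Hours needed to send data_terabytes to the ground over one link.

    link_gbps: sustained link rate in gigabits per second (assumption).
    contact_fraction: share of time the link is actually usable, for example
        because of ground-station visibility or weather (assumption, 0..1).
    """
    if data_terabytes < 0:
        raise ValueError("data_terabytes must be non-negative")
    if link_gbps <= 0:
        raise ValueError("link_gbps must be positive")
    if not 0.0 < contact_fraction <= 1.0:
        raise ValueError("contact_fraction must be in (0, 1]")
    bits = data_terabytes * 1e12 * 8           # decimal terabytes to bits
    effective_bps = link_gbps * 1e9 * contact_fraction
    return bits / effective_bps / 3600.0


if __name__ == "__main__":
    # Hypothetical comparison: shipping raw output vs. a small result.
    for label, terabytes in (("raw output", 100.0), ("compact result", 0.01)):
        hours = downlink_hours(terabytes, link_gbps=10.0, contact_fraction=0.5)
        print(f"{label}: {hours:.4f} hours")
```

The gap between the two cases is why I expect the early orbital workloads to be the ones that compute a lot and return little.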

In short: niche, high-throughput, non‑latency‑sensitive workloads could move to orbit within Musk’s 30–36 month headline window, but broad hyperscale adoption depends on several moving parts aligning quickly.

The timeline question: 36 months — optimistic, but not impossible

Is the 30–36 month window plausible? It depends on how you define “cheapest” and which workloads you include.

- Best‑case path (Musk assumptions): Starship reaches high reliability; launch cadence climbs to thousands per year; orbital solar manufacturing matures or launch prices fall extremely low; optical downlinks scale. Under this stack, early commercially sensible orbital AI clusters (specialized inference farms, solar‑powered training islands) could appear within a few years.

- Middle path: prototypes and selective commercial pilots in 3–5 years; meaningful orbitally‑hosted AI capacity that complements, rather than replaces, earthbound compute in 5–10 years.

- Conservative path: engineering, regulatory, and supply chain friction push wide-scale economic viability well beyond a decade.

My view: expect prototypes and pilot customers within Musk’s window; expect systemic migration only if the economics hold after accounting for transmission, maintenance, and regulatory overhead.

Societal, governance and industrial implications

If compute heads off-planet at scale, we must think about:

- Concentration of control: launch providers and whoever controls orbital power infrastructure will gain enormous leverage over compute supply. That has geopolitical and antitrust implications.

- Environmental tradeoffs: moving energy production to orbit shifts some environmental burdens (launch emissions, orbital debris, manufacturing impacts) rather than eliminating them. Net climate impact depends on the full lifecycle analysis.

- New supply chains and skills: space‑hardened silicon, radiation‑tolerant packaging, optical comms, and orbital ops expertise become strategic industries.

- Regulations and norms: orbital rights, spectrum allocation for downlinks, and debris mitigation require international coordination.

These are not secondary footnotes — they will determine whether orbital AI is an inspiring future or an extractive one.

What I recommend, in practice, to builders and policy makers

If you’re an entrepreneur, investor, researcher or regulator, here’s my short checklist of where to place your bets today:

- Invest in optical downlinks and data compression: if you can move terabits out of orbit cheaply, you win.

- Design chips and systems for radiation tolerance and fault redundancy: software-only approaches won’t remove the hardware layer of risk.

- Model the full delivered kWh economics (launch + build + ops vs. terrestrial grid + batteries + land + cooling) — don’t focus only on solar irradiance.

- Start policy conversations now: spectrum, orbital slots, sustainability rules for orbital infrastructure.

- Keep improving terrestrial efficiency: not every workload belongs in space; hybrid architectures will dominate.
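For the delivered-kWh point in the checklist above, here is a minimal sketch of what such a comparison could look like. It is a toy model under my own assumptions: no discounting, no hardware refresh, no downlink cost, and every class name, field, and number in the demo is a hypothetical placeholder rather than a real quote or measurement.

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 8766.0  # average year, including leap days


@dataclass
class OrbitalAssumptions:
    launch_usd_per_kg: float     # launch price (placeholder)
    kg_per_kw: float             # panels, radiators, structure per kW to compute
    build_usd_per_kw: float      # hardware cost per kW
    ops_usd_per_kw_year: float   # operations cost per kW per year
    lifetime_years: float
    sunlit_fraction: float       # share of time generating power

    def usd_per_kwh(self) -> float:
        capex = self.launch_usd_per_kg * self.kg_per_kw + self.build_usd_per_kw
        total = capex + self.ops_usd_per_kw_year * self.lifetime_years
        energy_kwh = HOURS_PER_YEAR * self.lifetime_years * self.sunlit_fraction
        return total / energy_kwh


@dataclass
class TerrestrialAssumptions:
    grid_usd_per_kwh: float
    battery_usd_per_kwh: float   # storage cost per delivered kWh
    land_usd_per_kwh: float
    cooling_overhead: float      # extra energy for cooling, e.g. 0.2 for 20%

    def usd_per_kwh(self) -> float:
        energy = self.grid_usd_per_kwh + self.battery_usd_per_kwh
        return energy * (1.0 + self.cooling_overhead) + self.land_usd_per_kwh


if __name__ == "__main__":
    # Placeholder inputs purely to exercise the model; swap in your own.
    orbit = OrbitalAssumptions(launch_usd_per_kg=200.0, kg_per_kw=10.0,
                               build_usd_per_kw=1500.0, ops_usd_per_kw_year=50.0,
                               lifetime_years=5.0, sunlit_fraction=0.99)
    ground = TerrestrialAssumptions(grid_usd_per_kwh=0.06, battery_usd_per_kwh=0.03,
                                    land_usd_per_kwh=0.005, cooling_overhead=0.2)
    print(f"orbital:     ${orbit.usd_per_kwh():.3f} per kWh")
    print(f"terrestrial: ${ground.usd_per_kwh():.3f} per kWh")
```

Even a toy like this shows which inputs dominate: launch price times mass per kW on the orbital side, and the energy price with its cooling overhead on the ground side. A serious version would add discounting, failure rates, and the cost of getting results back to Earth.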

Why this matters to me — and where I’ve written about resonance before

I’ve long thought about the intersection of hardware limits and AI progress. Back in 2023 I wrote about the rise of xAI and reflected on how Musk’s questions about AI safety and architectures echo my own “Parekh’s Postulate” ideas about pro‑human AI development and industrial integration — you can read that continuity in my earlier piece “Musk supports Parekh’s Postulate of Super‑Wise AI”. That continuity matters: the people who imagine new futures often nudge markets, but real change needs engineering, capital, and governance to move together.

My bottom line

I both admire and temper Elon Musk’s claim. It’s a bold forecast that forces us to do the arithmetic: energy capture, launch economics, data transmission, and operational resilience. The physics advantage of orbital solar is real; the question is whether the rest of the stack can be solved, and solved quickly.

If the world accelerates on launch costs, optical comms, and chip availability, expect the first cost‑sensitive orbital AI plays sooner rather than later. If not, we’ll see pilots and experiments for years while the heavy‑lifting of policy, supply chain and reliability continues.

Either way, this is the kind of prediction that should make technologists, funders and regulators sit up and do the math — and I’m glad someone said it plainly.

References

- Musk’s full conversation and timestamped transcript on the podcast I listened to: YouTube / Dwarkesh podcast.

- Reporting that summarized the claim and the broader context: Times of India and Economic Times.

- My earlier reflections that connect to this technology and ethical arc: Musk supports Parekh’s Postulate of Super‑Wise AI.

Regards,

Hemen Parekh
