Introduction

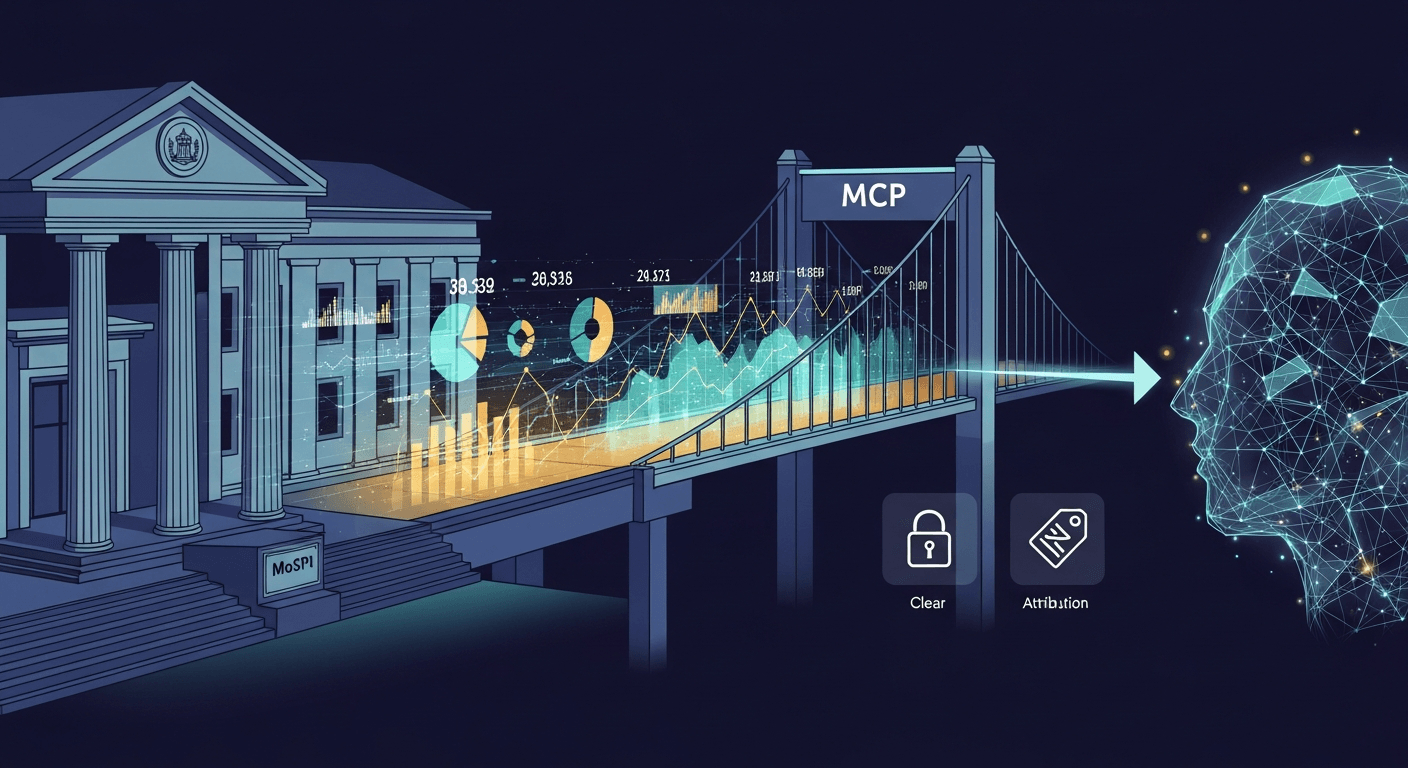

I write often about the thin line between data accessibility and responsible governance. The Ministry of Statistics and Programme Implementation’s (MoSPI) recent beta launch of a Model Context Protocol (MCP) server for the eSankhyiki portal is one of those inflection points that deserves close, pragmatic attention.^1

What the MCP server is

- At a technical level, the server implements the Model Context Protocol (MCP), an open standard for connecting AI applications to external tools and data sources. Here it lets models and AI tools discover, understand and query published datasets programmatically. In simple terms: instead of downloading CSVs and wrangling files, an AI assistant can call a standardized endpoint and receive attributed, structured government statistics.^1

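- The current beta exposes seven core statistical products as a pilot: the Periodic Labour Force Survey (PLFS), Consumer Price Index (CPI), Annual Survey of Industries (ASI), Index of Industrial Production (IIP), National Accounts Statistics (NAS), Wholesale Price Index (WPI) and environmental statistics. The codebase and integration patterns are openly available for inspection and contribution.^2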
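For readers curious what "calling a standardized endpoint" looks like on the wire: MCP messages are JSON-RPC 2.0, and a client typically lists a server's tools with `tools/list` and invokes one with `tools/call`. The sketch below only builds those two request bodies; it does not contact MoSPI, and a real session would first perform MCP's `initialize` handshake, omitted here. The tool name `get_data` and its argument keys (`dataset`, `indicator`, `filters`) are hypothetical placeholders, not the names the eSankhyiki server publishes; the public repository is the place to check the real ones.

```python
import json
from itertools import count
from typing import Optional

# JSON-RPC 2.0 requires an id on every request; a simple counter is enough here.
_next_id = count(1)


def jsonrpc_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request object, the message format MCP uses."""
    message = {"jsonrpc": "2.0", "id": next(_next_id), "method": method}
    if params is not None:
        message["params"] = params
    return message


# 1. Discovery: ask the server which tools it exposes (a standard MCP method).
list_tools = jsonrpc_request("tools/list")

# 2. Invocation: call one tool by name with JSON arguments (also standard MCP).
#    "get_data" and the argument keys below are HYPOTHETICAL placeholders,
#    not the tool names the eSankhyiki server actually exposes.
call_tool = jsonrpc_request(
    "tools/call",
    {
        "name": "get_data",
        "arguments": {"dataset": "CPI", "indicator": "general_index", "filters": {"year": 2024}},
    },
)

print(json.dumps(list_tools))
print(json.dumps(call_tool, indent=2))
```

The point of the design is that every dataset is reached through the same two verbs; only the tool name and arguments change.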

How it links AI tools with government data

The MCP server acts as a translator and catalog:

- Metadata-first discovery: tools call endpoints to list available indicators, dimensions and valid filters before asking for data. This cuts down on the blind queries that lead to errors or hallucinated figures.

- Sequential validation workflow: AI clients are encouraged to confirm indicators and parameters before requesting data, reducing malformed queries and timeouts.

- Uniform interface: once connected, multiple datasets are reachable through the same protocol—so assistants, analytic pipelines or dashboards can pull consistent numbers without custom adapters.^2
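The discover-then-validate flow described above can be sketched as a small client-side check: fetch the catalogue once, then refuse to issue a data request whose indicator or filter values are not in it. This is a minimal sketch under stated assumptions: the `CATALOG` dictionary stands in for whatever the server's metadata tools actually return, and its indicator and dimension names are invented for illustration.

```python
from dataclasses import dataclass, field

# HYPOTHETICAL catalogue standing in for a metadata/discovery response.
# Real indicator codes and dimension names on eSankhyiki will differ.
CATALOG = {
    "CPI": {
        "indicators": {"general_index", "food_index"},
        "dimensions": {"year": {2022, 2023, 2024}, "sector": {"rural", "urban", "combined"}},
    },
    "PLFS": {
        "indicators": {"unemployment_rate", "lfpr"},
        "dimensions": {"year": {2022, 2023}, "gender": {"male", "female", "person"}},
    },
}


@dataclass
class ValidationResult:
    ok: bool
    errors: list[str] = field(default_factory=list)


def validate_query(dataset: str, indicator: str, filters: dict) -> ValidationResult:
    """Check a query against the catalogue before any data call is made."""
    meta = CATALOG.get(dataset)
    if meta is None:
        return ValidationResult(False, [f"unknown dataset: {dataset}"])
    errors = []
    if indicator not in meta["indicators"]:
        errors.append(f"unknown indicator for {dataset}: {indicator}")
    for dim, value in filters.items():
        allowed = meta["dimensions"].get(dim)
        if allowed is None:
            errors.append(f"unknown dimension for {dataset}: {dim}")
        elif value not in allowed:
            errors.append(f"invalid value for {dim}: {value!r}")
    return ValidationResult(not errors, errors)


# A well-formed query passes; a malformed one is caught before it reaches the server.
print(validate_query("CPI", "general_index", {"year": 2024, "sector": "rural"}))
print(validate_query("CPI", "inflation", {"year": 1999}))
```

In a live client, `CATALOG` would be filled from the server's metadata responses rather than hard-coded, and the error messages could be fed back to the model so it corrects its own query.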

Benefits (who gains and how)

- Faster research cycles: researchers and policymakers spend less time on ETL and more on interpreting trends.

- Grounded AI outputs: when an assistant cites MoSPI data directly, the response is attributable and verifiable—helpful for reducing misinformation in economic or social reporting.^1

- Interoperability for developers: startups and analytics teams can integrate official stats into models, applications and automated reports with standard clients and examples in the public repo.^2

- Policy agility: immediate access to verified indicators helps scenario analysis during shocks (e.g., inflation spikes, employment changes).

Privacy and security concerns (balanced assessment)

The benefits are real, but they bring new responsibilities:

- Data classification and access control lists (ACLs): the datasets exposed today are public and read-only, but as coverage grows the server must enforce each dataset's classification (public, restricted, microdata). Ensuring microdata never becomes inadvertently accessible is paramount.

- Inference and re-identification risks: even aggregated data, when joined with external sources via powerful analytics, can expose sensitive patterns. Governance must set clear limits on dataset joins and published granularity.

- Authentication and rate-limiting: the pilot appears open, but production rollouts should support authentication, quotas and logging so misuse can be detected and throttled.

- Provenance and attribution: AI replies should include dataset citations and timestamps to avoid stale or misleading claims. The MCP design encourages attribution; implementation must make it unavoidable.
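Several of these controls can live in a thin gateway in front of the data service. The sketch below assumes three classification tiers, a per-client token-bucket rate limiter, an audit log, and a response wrapper that always attaches a citation and retrieval timestamp. None of this is taken from MoSPI's implementation: the tier names mirror the bullet above, while `Gateway`, the `CLASSIFICATION` table, the `fetch` callable and the citation format are hypothetical illustrations of the policy.

```python
import time
from datetime import datetime, timezone
from typing import Callable

# HYPOTHETICAL classification table; real tiers would come from policy review.
CLASSIFICATION = {"CPI": "public", "PLFS": "public", "PLFS_unit_records": "microdata"}
OPEN_TIERS = {"public"}  # only this tier is served by this gateway


class TokenBucket:
    """Token bucket: allows bursts up to `capacity`, refills at `rate` tokens/second."""

    def __init__(self, capacity: float, rate: float) -> None:
        self.capacity, self.rate = capacity, rate
        self.tokens = capacity
        self.last = time.monotonic()

    def allow(self) -> bool:
        now = time.monotonic()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class Gateway:
    """HYPOTHETICAL front door: classification check, quota, audit log, provenance."""

    def __init__(self, fetch: Callable[[str], list], capacity: float = 5, rate: float = 1.0) -> None:
        self.fetch = fetch  # stand-in for the real backend call
        self.capacity, self.rate = capacity, rate
        self.buckets: dict[str, TokenBucket] = {}
        self.audit_log: list[tuple[str, str, str]] = []  # (client, dataset, outcome)

    def request(self, client: str, dataset: str) -> dict:
        tier = CLASSIFICATION.get(dataset, "restricted")  # unknown datasets: deny by default
        if tier not in OPEN_TIERS:
            self.audit_log.append((client, dataset, "denied"))
            raise PermissionError(f"{dataset} is classified {tier!r}")
        bucket = self.buckets.get(client)
        if bucket is None:
            bucket = self.buckets[client] = TokenBucket(self.capacity, self.rate)
        if not bucket.allow():
            self.audit_log.append((client, dataset, "throttled"))
            raise RuntimeError(f"rate limit exceeded for {client}")
        data = self.fetch(dataset)
        self.audit_log.append((client, dataset, "served"))
        # Citation and timestamp travel with the data so AI answers can quote them.
        return {
            "data": data,
            "source": f"MoSPI eSankhyiki, dataset {dataset}",
            "retrieved_at": datetime.now(timezone.utc).isoformat(),
        }
```

For example, a gateway built with `capacity=2, rate=0.0` would serve a client twice and then throttle it, while any request for `PLFS_unit_records` is refused and logged. Denying unknown datasets by default means a newly onboarded dataset stays closed until someone classifies it.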

Potential use cases (concrete scenarios)

- Real-time policy dashboards: ministries and state governments can plug live NSO indicators into decision-support systems to monitor program outcomes.

- Journalism and fact-checking: newsrooms can embed verified inflation, employment or production metrics directly into stories and visualizations.

- Private sector planning: businesses can automate market-scan reports that combine official indicators with firm-level signals for faster strategy cycles.

- Academic reproducibility: scholars can script data pulls that are versioned and attributable, improving reproducibility of empirical work.
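For the reproducibility scenario, one lightweight habit is to record every pull in a manifest: the exact query, when it ran, and a hash of what came back, so a later rerun can show whether the published figures have changed, for example after a revision. This is a sketch under assumptions: the manifest layout and the `record_pull` and `changed_since` helpers are hypothetical, the query keys are invented, and `rows` stands for whatever the MCP tool returned.

```python
import hashlib
import json
from datetime import datetime, timezone


def canonical_hash(obj) -> str:
    """SHA-256 over a canonical JSON encoding (sorted keys, compact separators)."""
    blob = json.dumps(obj, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hashlib.sha256(blob).hexdigest()


def record_pull(manifest: list, query: dict, rows: list) -> dict:
    """Append a provenance entry for one data pull and return it."""
    entry = {
        "query": query,
        "query_hash": canonical_hash(query),
        "result_hash": canonical_hash(rows),
        "row_count": len(rows),
        "retrieved_at": datetime.now(timezone.utc).isoformat(timespec="seconds"),
    }
    manifest.append(entry)
    return entry


def changed_since(entry: dict, new_rows: list) -> bool:
    """True if re-running the same query now returns different data."""
    return canonical_hash(new_rows) != entry["result_hash"]


manifest: list = []
# HYPOTHETICAL query keys; placeholder values, not real IIP data.
query = {"dataset": "IIP", "indicator": "general_index", "filters": {"year": 2024}}
rows = [{"month": "2024-01", "value": 0.0}]
entry = record_pull(manifest, query, rows)
print(json.dumps(manifest, indent=2))
print(changed_since(entry, rows))
```

Committing the manifest alongside the analysis code lets a reviewer rerun each query and see immediately which inputs, if any, have moved.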

Implementation timeline and pragmatics

- Pilot scale: MoSPI launched the beta with a deliberately narrow seven-product pilot, which lets it iterate without endangering sensitive systems.^1

- Progressive onboarding: expect a phased expansion of datasets, guided by technical readiness and policy review. Not every dataset needs the same exposure model; microdata likely remains controlled.

- Developer tooling: public documentation, examples and a GitHub repository already exist. That accelerates third-party integration but also creates a community responsibility to report issues and contribute fixes.^2

Expert perspectives (synthesis)

Across official announcements and early technical write-ups, common themes emerge:

- Enthusiasm for reducing friction: practitioners value the way MCP turns “download-and-clean” into “query-and-use.”^1

- Caution on governance: analysts urge layered access controls and careful rollout of microdata to avoid privacy harm.

- Open-source as trust-building: publishing implementation code and docs helps independent auditors and civic technologists verify behaviour and suggest improvements.^2

Limitations and realistic expectations

- Beta means scope limits: only a small fraction of the full eSankhyiki catalogue is live today; broad coverage will take time.

- Not a substitute for careful modelling: an AI that consumes MoSPI data still needs robust modelling practices to avoid misinterpretation.

- Dependency management: third parties should plan for endpoint changes, versioning and local caching strategies for critical workflows.
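On dependency management, a common pattern is a read-through cache with a time-to-live that falls back to the last good copy when the endpoint is down or has changed shape. The sketch below is generic: `FallbackCache` is a hypothetical helper, `fetch` stands in for any client call (an MCP tool or otherwise), and the TTL value is an arbitrary assumption to tune per workflow.

```python
import time
from typing import Any, Callable


class FallbackCache:
    """Read-through cache with a TTL that serves the last good copy if a refresh fails."""

    def __init__(self, fetch: Callable[[str], Any], ttl_seconds: float = 3600.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.fetch = fetch          # any client call: MCP tool, REST endpoint, file read
        self.ttl = ttl_seconds
        self.clock = clock          # injectable so expiry can be tested without waiting
        self._store: dict[str, tuple[float, Any]] = {}

    def get(self, key: str) -> tuple[Any, bool]:
        """Return (value, is_stale); raise only when nothing has ever been cached."""
        now = self.clock()
        cached = self._store.get(key)
        if cached is not None and now - cached[0] < self.ttl:
            return cached[1], False                 # fresh cache hit
        try:
            value = self.fetch(key)
        except Exception:
            if cached is not None:
                return cached[1], True              # refresh failed: serve stale, flagged
            raise
        self._store[key] = (now, value)
        return value, False


# Demo with a fake clock and an endpoint that fails after its first call.
t = [0.0]
calls: list[str] = []


def flaky_fetch(key: str) -> dict:
    calls.append(key)
    if len(calls) > 1:
        raise ConnectionError("endpoint unavailable")
    return {"dataset": key}


cache = FallbackCache(flaky_fetch, ttl_seconds=60, clock=lambda: t[0])
first = cache.get("CPI")    # fetched from the endpoint
t[0] = 120.0                # move past the TTL
second = cache.get("CPI")   # refresh fails, last good copy returned with is_stale=True
print(first, second)
```

Returning an explicit `is_stale` flag, rather than silently serving old numbers, keeps the provenance concern above intact: a dashboard or assistant can say the figure may be out of date.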

Conclusion

I welcome MoSPI’s MCP pilot as a pragmatic step toward making sovereign data AI-ready. The technical design and open-source posture create an opportunity: to build tools that are both powerful and accountable. But achieving that balance requires the right governance controls, auditing, and a staged approach to dataset expansion.

Call to action

- For policymakers: formalize data classification, access tiers and auditing requirements before onboarding sensitive datasets.

- For technologists: test connectors, file issues, and contribute to the public repo—help harden the implementation.

- For civil society: advocate for transparent logs, provenance in AI outputs, and clear redress channels.

If we get these governance pieces right, MCP servers can become a foundational interface—like APIs for money or identity—that lets AI add value to public life without eroding trust.

Regards,

Hemen Parekh

Sources

- MoSPI / NSO press release on the MCP beta (eSankhyiki) [PIB release].^1

- Public GitHub repository and developer write-ups for the eSankhyiki MCP pilot.^2

Hello Candidates:

- For UPSC (IAS, IPS, IFS, etc.) exams, you must prepare to answer essay-type questions that test your General Knowledge / sensitivity to current events.

- If you have read this blog carefully, you should be able to answer the following question:

- Need help? No problem. Following are two AI AGENTS where we have PRE-LOADED this question in their respective Question Boxes. All that you have to do is click SUBMIT.

- www.HemenParekh.ai { an SLM, powered by my own digital content of more than 50,000 documents written by me over the past 60 years of my professional career }

- www.IndiaAGI.ai { a consortium of 3 LLMs which debate and deliver a CONSENSUS answer, and each gives its own answer as well! }

- It is up to you to decide which answer is more comprehensive / nuanced. (For sheer amazement, click both SUBMIT buttons quickly, one after another.) Then share any answer with yourself / your friends using WhatsApp / email. Nothing stops you from submitting all the questions from last year’s UPSC exam paper as well (just copy / paste them from your resource)!

- Maybe there are other online resources that also provide answers to UPSC “General Knowledge” questions, but only I provide them in 26 languages!