Summary: what happened (the Grok row)

In recent weeks the UK government moved to close a regulatory gap exposed by the so‑called "Grok" episode. Grok — an AI chatbot hosted on the social platform X and developed by xAI — was used by some users to generate highly sexualised and non‑consensual deepfake images of real people, including children. The incident prompted a swift public backlash, an Ofcom investigation into X’s compliance with the Online Safety Act, and a political response that called for AI chatbots to be brought explicitly within online safety rules.

The immediate consequence was a policy pivot: regulators and ministers signalled that chatbots which previously sat outside the scope of some duties would now face obligations to prevent the generation and distribution of illegal or harmful content.

The change being proposed: chatbots in the Online Safety Act

Broadly, the UK’s move is twofold:

- Extend the Online Safety Act’s illegal content duties so they explicitly apply to AI chatbots and generative models, not just platforms that facilitate user‑to‑user sharing.

- Hold chatbot providers responsible for taking reasonable steps to prevent the generation of illegal content (for example non‑consensual intimate images, child sexual abuse material (CSAM) and illegal hate content) and for implementing age and identity checks where appropriate.

In practice this could mean new obligations for product design (safer defaults, content filters, audit trails), compliance processes (impact assessments, red‑teaming results), and enforcement mechanisms (fines, blocking orders or other sanctions) when providers fail to take appropriate steps.

Potential impact on AI companies and users

For AI companies

- Compliance costs will rise. Smaller providers may need to invest in moderation pipelines, human review, and evidence retention to meet statutory duties.

- Product design incentives will shift toward conservative outputs and stronger guardrails; some features (image generation from arbitrary prompts, unrestricted "nudification" of photos of real people) may be restricted or removed for UK users.

- Business models that monetised unrestricted generation, or that relied on minimal safety controls, could face legal and reputational risk.

For users

- Safer baseline protections for vulnerable groups, especially children and victims of abuse.

- Potential reduction in creative freedom or convenience where models refuse to comply with risky prompts.

- Uneven experience across jurisdictions: a chatbot may behave differently in the UK compared with the EU or US depending on local rules.

How this compares to other frameworks (UK, EU, US)

UK: The Online Safety Act already imposes duties on platforms to prevent illegal content. The proposed change is an extension of those duties to include AI chatbots explicitly — closing a loophole for standalone generative services that do not primarily act as user‑to‑user sharing platforms.

EU: The EU’s AI Act classifies AI systems by risk and places obligations on high‑risk systems (such as transparency and conformity assessments). It also sets transparency requirements for certain applications, for example telling users that they are interacting with a chatbot and labelling deepfakes. The UK approach is complementary but more immediate: it targets illegal content and child protection through an established safety law rather than through a new product‑safety style regime.

US: Regulation remains sectoral and fragmented, with agencies (FTC, DOJ, state attorneys general) using existing consumer‑protection, privacy and criminal laws. There is no uniform federal duty specifically for AI chatbots yet; the UK’s move is therefore more prescriptive and targeted in the short term.

Expert perspectives (representative, realistic views)

An independent AI policy researcher I spoke with observed: "Extending illegal content duties to chatbots is a necessary corrective. Generative models are not neutral tools — they produce outputs that can cause real harm, and law should reflect that reality."

A compliance lead at a mid‑sized AI firm commented: "We welcome clarity on duties, but the devil is in the detail — what counts as 'reasonable steps' to prevent harm, and how will regulators assess compliance without stifling innovation?"

A civil‑society advocate said: "This could be a turning point for victim protection online — but enforcement and transparency are key. If providers quietly gatekeep content without oversight, new harms can arise."

(These quotes are representative and paraphrased to reflect typical stakeholder positions.)

Challenges and practical trade‑offs

- Definitional difficulties: what precisely is a regulated "chatbot"? Many services blend assistant features with social functionality.

- Technical limits: content safety is probabilistic. Filters reduce but do not eliminate harmful outputs, and they can produce false positives that frustrate legitimate users.

- Jurisdictional mismatch: global models and cross‑border services will face conflicting legal duties; compliance complexity is high.

- Enforcement burden: regulators will need technical expertise and resources to assess sophisticated models, red‑teaming results, and platform logs.
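
The "technical limits" point above can be illustrated with a toy sketch. It assumes a hypothetical safety classifier that gives each output a harm score between 0 and 1 and blocks anything at or above a chosen threshold. The scores, labels and thresholds are invented for illustration and do not describe any real provider's system.

```python
# Toy illustration only: scores and labels are made up, not real moderation data.
# Each item is (hypothetical harm score from a classifier, whether it is truly harmful).
SAMPLES = [
    (0.95, True), (0.80, True), (0.55, True), (0.30, True),
    (0.70, False), (0.40, False), (0.20, False), (0.10, False), (0.05, False),
]


def evaluate(threshold, samples=SAMPLES):
    """Block every item scoring >= threshold and count both kinds of error."""
    # Harmful items that slip through because they score below the threshold.
    missed_harmful = sum(
        1 for score, harmful in samples if harmful and score < threshold
    )
    # Legitimate items that get blocked because they score at or above it.
    blocked_legitimate = sum(
        1 for score, harmful in samples if not harmful and score >= threshold
    )
    return missed_harmful, blocked_legitimate


if __name__ == "__main__":
    for threshold in (0.25, 0.50, 0.75):
        missed, blocked = evaluate(threshold)
        print(
            f"threshold={threshold:.2f}  "
            f"missed harmful={missed}  blocked legitimate={blocked}"
        )
```

Because the harmful and legitimate scores overlap in this toy data, no threshold brings both counts to zero: a stricter setting blocks more legitimate requests, and a looser one lets more harmful outputs through. Any statutory test of "reasonable steps" would have to be applied against that kind of trade-off.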

Next steps I expect (and what to watch for)

- Draft primary or secondary legislation specifying the obligations of generative AI and chatbot providers under the Online Safety Act.

- Ofcom and data‑protection regulators publishing technical guidance on mitigation steps, impact assessments, and record‑keeping duties.

- Industry engagement: sandboxes, compliance codes, voluntary standards and certification schemes to demonstrate "reasonable steps."

- Legal challenges and litigation testing the scope of duties, particularly for novel models and cross‑border services.

Why this matters: governance implications

This is not only a reaction to one scandal. It signals a broader evolution: governments are treating generative AI as an operational product that must carry legal responsibilities — similar to how media platforms, financial services or medical devices carry duties. In my own earlier writing I argued for clear, embedded principles for chatbots and meaningful human feedback mechanisms (what I called Parekh’s Law of Chatbots). That framework emphasises design controls, human review, and accountability — themes that resonate with the UK’s current direction.

Conclusion: the future of AI governance

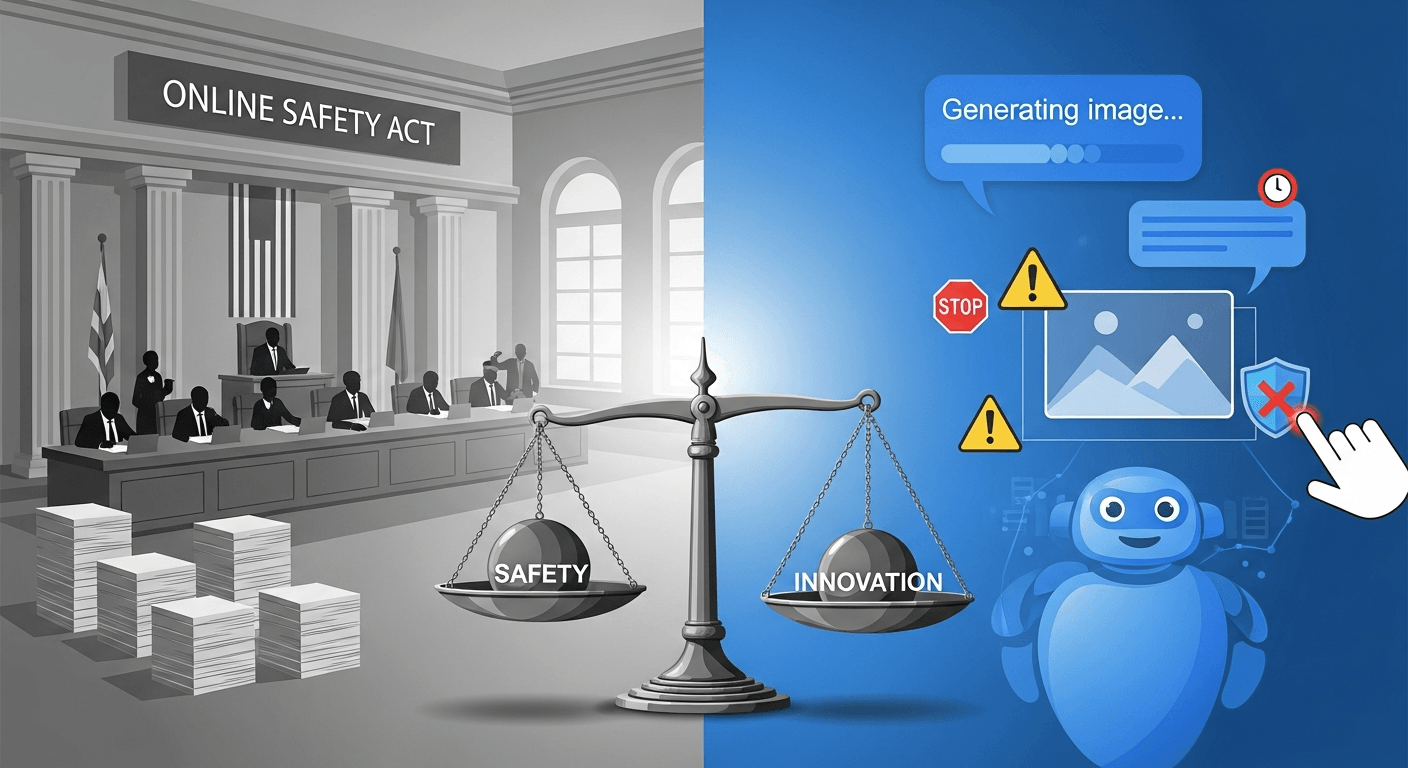

The UK’s move to fold chatbots into online safety laws is a pragmatic step to close a visible gap and protect victims. It will accelerate a global policy conversation about how to allocate responsibility between developers, platforms and users. Expect a period of regulatory experimentation: clearer duties, heavier compliance for providers, and likely pushback on technical feasibility and international scope. Ultimately, successful governance will balance harm reduction with room for innovation — and that balance will be negotiated in law, policy guidance and public debate over the months ahead.

Regards,

Hemen Parekh

